Submitted by elijahmeeks t3_11esh1j in dataisbeautiful

Comments

elijahmeeks OP t1_jafu0ax wrote

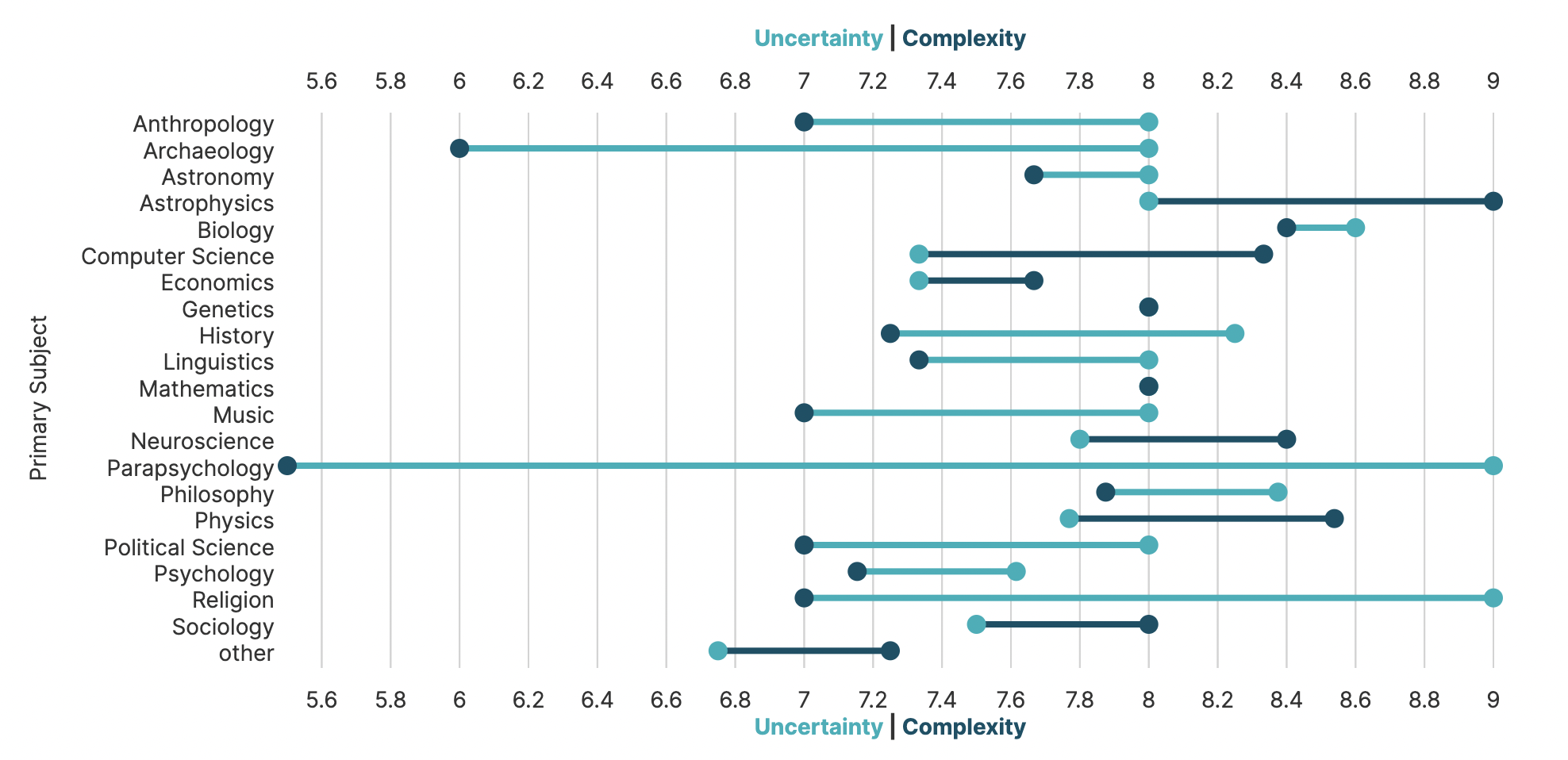

I asked ChatGPT to give me a list of topics that were too complex or uncertain for it to discuss without being misleading, and made a bunch of charts out of the results. This dumbbell plot gives a nice overview of the subject areas of the 100 topics ChatGPT gave me, but if you want to see some treemaps and word clouds, you can check out the whole thing here: https://app.noteable.io/f/8e355d65-cd94-4afe-bb81-b9aa24a457dc

I used a Noteable notebook, which is Python + SQL. The data visualization uses their built-in tool, DEX, which uses JavaScript with Semiotic and D3 under the hood.

Edited to add: This is a live notebook. You can interact with it, explore it, and export the dataset that I had ChatGPT create for me.

Winterstorm8932 t1_jafwc1e wrote

How is complexity judged, especially considering how so many of these subjects overlap?

elijahmeeks OP t1_jafwspx wrote

The short answer: These ratings come from ChatGPT.

The long answer: You'd have to read through the full exploration to see the entire picture. Basically, I asked ChatGPT to give me 100 topics too uncertain or complex to discuss without misleading users, and to give me subject areas for them along with ratings of their complexity and uncertainty. So this plot shows the aggregate complexity and uncertainty values of those 100 topics, grouped by each topic's subject area. You can see it all in much more detail in the notebook I link to in my comment.
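The aggregation described above (100 rated topics rolled up into one dumbbell per subject area) can be sketched roughly as follows. This is a hypothetical TypeScript sketch, not the notebook's actual code (the notebook was Python + SQL): the record fields `topic`, `subject`, `complexity`, and `uncertainty` are assumed names, and averaging is an assumption, since the comment only says "aggregate".

```typescript
// Hypothetical record shape: the real dataset's column names may differ.
interface TopicRating {
  topic: string;
  subject: string;
  complexity: number;
  uncertainty: number;
}

// One row per subject area: the two endpoints of one dumbbell.
interface SubjectSummary {
  subject: string;
  count: number;
  meanComplexity: number;
  meanUncertainty: number;
}

// Group topics by subject area and average each rating.
// Using the mean is an assumption; the original only says "aggregate".
function aggregateBySubject(rows: TopicRating[]): SubjectSummary[] {
  const totals = new Map<string, { count: number; complexity: number; uncertainty: number }>();
  for (const row of rows) {
    const t = totals.get(row.subject) ?? { count: 0, complexity: 0, uncertainty: 0 };
    t.count += 1;
    t.complexity += row.complexity;
    t.uncertainty += row.uncertainty;
    totals.set(row.subject, t);
  }
  // Map preserves insertion order, so subjects appear in first-seen order.
  return Array.from(totals, ([subject, t]) => ({
    subject,
    count: t.count,
    meanComplexity: t.complexity / t.count,
    meanUncertainty: t.uncertainty / t.count,
  }));
}
```

Each returned row supplies both ends of one bar: `meanComplexity` for one marker and `meanUncertainty` for the other. A different aggregate (median, sum) would change only the final mapping step.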

aluvus t1_jag1qou wrote

ChatGPT is not a data source, even for data about itself. There is no underlying "thinking machine" that can, say, meaningfully assign numeric scores like this. It is essentially a statistical language machine. It is a very impressive parrot.

There is nothing inside of it that can autonomously reach a conclusion that a topic is too difficult to comment on; in fact, many people have noted that it will generate text that sounds very confident and is very wrong. It does not have a "mental model" by which it can actually be uncertain about claims in the way that a human can.

The first question you asked (100 topics) is perhaps one that it can answer in a meaningful way, but only inasmuch as it reveals things that the programmers programmed it not to talk about. But the others reflect only, at best, how complex people have said a topic is, in the corpus of text that was used to train its language model.

Regarding the plot itself, I would suggest "uncertainty about topic" and "complexity of topic" for the labels, as the single-word labels were difficult for me to make sense of. I would also suggest reordering the labels, since complexity should be the thing that leads to uncertainty (for a human; for ChatGPT they are essentially unrelated).

elijahmeeks OP t1_jag2y5a wrote

You've posted a lot of very confident points. You'd do well as an online AI.

- ChatGPT is most definitely a data source now, and (along with similar tools) it will be used as such more and more going forward, so it's good to examine how it approaches making data. Whether or not it "should" be able to assign meaningful numerical scores to things like this, it sure was willing to.

- Agreed, and it's even more concerning how it does this with data. Take a look at the end of the notebook and you'll see how it hallucinates with the data it gives me. Again, people are going to use these tools like this, so we should be aware of how it responds.

- I think it reveals not just the biases of the corpora and creators, but also the controls to avoid controversy that make it evaluate certain topics as more "uncertain".

- Good point. I struggle with the way this subreddit is designed to showcase a single chart since so many of my charts are part of larger apps or documents.

aluvus t1_jag4p3r wrote

The fact that people misuse it as a data source is not an excuse for you to knowingly misuse it, doubly so without providing any context to indicate that you know the data is basically bunk. This is fundamentally irresponsible behavior. Consider how your graphic will be interpreted by different audiences:

- People who do not know how ChatGPT works (most people): wow, the AI can figure out how complex a topic is and how certain it should be about it! These are definitely real capabilities, and not an illusion. Thank you for reinforcing my belief that it is near-human intelligence!

- People who do know how ChatGPT works: these numbers are essentially meaningless, and this graphic will only serve to mislead people.

> Whether it "should" be able to assign meaningful numerical scores to things like this, it sure was willing to.

Yes, so will random.org. Should I make a graphic of that too? Perhaps I could imply that it is sentient.

yourmamaman t1_jafyyvw wrote

What would be your hypothesis for why fields like parapsychology differ from, say, computer science in this context? (The bars are flipped, and the difference is large.)

elijahmeeks OP t1_jag0zz8 wrote

I think it reflects the inherent biases in the design, training, and implementation of the ML algorithms that drive ChatGPT. Science topics are considered "less uncertain but more complex" because its source material, creators, and developers believe that. But it also has controls in place to avoid saying controversial things, and topics like ghosts, history, art, and religion are all much more likely to be controversial and therefore more "uncertain" to ChatGPT when it comes to giving answers.

Accomplished-Owl3330 t1_jag08aq wrote

Sorry if this comes across as a stupid question, but how are the scales and the intervals determined?

elijahmeeks OP t1_jag0qj8 wrote

Not a stupid question. It uses the "nice" functionality of D3 scales. Because the low end doesn't land on a "nice" value, it's hidden (which can be really frustrating sometimes). The chart is generated with Semiotic, a dataviz library I created, which uses D3 under the hood for things like this. You can see the chart interactively in the original notebook that I link to in my comment if you want to play with it.
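For anyone curious what "nice" means here, below is a minimal, self-contained TypeScript sketch of the nice-number idea, modeled on (not copied from) D3's approach: the raw step is snapped to 1, 2, 5, or 10 times a power of ten, and the domain ends are pushed outward to multiples of that step. The function names `niceStep`, `niceDomain`, and `ticks` are hypothetical. In real code you would call `d3.scaleLinear().domain([lo, hi]).nice()` instead. This sketch handles only the simple case and does not reproduce D3's care with floating-point noise on fractional steps.

```typescript
// Thresholds modeled on d3-array's tick logic: the raw step's leading
// factor is snapped to 1, 2, 5, or 10 times a power of ten.
const E10 = Math.sqrt(50);
const E5 = Math.sqrt(10);
const E2 = Math.sqrt(2);

// Pick a "nice" step that yields roughly `count` intervals over [start, stop].
function niceStep(start: number, stop: number, count: number): number {
  if (!(stop > start) || count <= 0) throw new Error("expected start < stop and count > 0");
  const raw = (stop - start) / count;
  const power = Math.floor(Math.log10(raw));
  const error = raw / Math.pow(10, power);
  const factor = error >= E10 ? 10 : error >= E5 ? 5 : error >= E2 ? 2 : 1;
  return factor * Math.pow(10, power);
}

// Extend [start, stop] outward to multiples of the nice step, repeating
// until the step stops changing (widening the domain can change the step).
function niceDomain(start: number, stop: number, count = 10): [number, number, number] {
  let step = NaN;
  for (let i = 0; i < 10; i++) {
    const next = niceStep(start, stop, count);
    if (next === step) break;
    step = next;
    start = Math.floor(start / step) * step;
    stop = Math.ceil(stop / step) * step;
  }
  return [start, stop, step];
}

// Tick values are the multiples of `step` that fall inside [start, stop].
function ticks(start: number, stop: number, step: number): number[] {
  const out: number[] = [];
  for (let i = Math.ceil(start / step); i <= Math.floor(stop / step); i++) {
    out.push(i * step);
  }
  return out;
}
```

With a hypothetical domain of [2.3, 8.7] and about 5 intervals, `niceDomain(2.3, 8.7, 5)` widens it to [2, 9] with a step of 1, so both ends get a labeled tick. Without that widening, `ticks(2.3, 8.7, 1)` starts at 3, which leaves the low end unlabeled, the behavior described above.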

Accomplished-Owl3330 t1_jag1a4r wrote

Ahh got it. Thanks for taking the time!

debunk_this_12 t1_jag233w wrote

What do uncertainty and complexity mean in this context?

elijahmeeks OP t1_jafu4ac wrote

Dumbbell Plot or Barbell Plot? Will we ever figure out this, the most critical question in modern data visualization?

fortnitefunnies3 t1_jagp4kh wrote

I can’t really tell which corresponds to which.

harrrrrrjinderrrrr t1_jafvsgn wrote

Interesting! What was the data source you used?